Open Science

Vaclav (Vashek) Petras

NCSU GeoForAll Lab

at the

Center for Geospatial Analytics

North Carolina State University

GIS 710: Geospatial Analytics for Grand Challenges

October 27, 2025

Learning Objectives

- Understanding motivation for practicing open science

- Understanding openness

- Understanding complexity of practicing open science

- Critical thinking about pros, cons, and challenges

- General understanding of tools and services involved

- Practical knowledge of tools for sharing research and computations

- Ideas about how to use them in complex geospatial applications

- Center's open projects and approach

Vaclav (Vashek) Petras

- Center: Director of Technology

- GRASS: Core Development Team, Project Steering Committee

- OSGeo: Charter Member

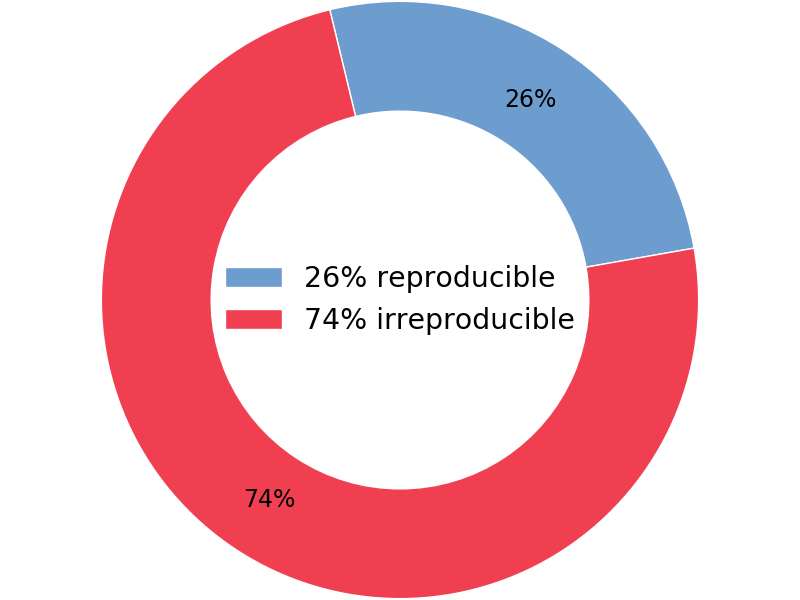

Reproducibility of Computational Articles

Stodden et al. (PNAS, March 13, 2018)

204 computational articles from Science in 2011–2012

Stodden, V., Seiler, J., & Ma, Z. (2018).

An empirical analysis of journal policy effectiveness for computational reproducibility.

In: Proceedings of the National Academy of Sciences

115(11), p. 2584-2589.

DOI 10.1073/pnas.1708290115

Ostermann, F.O., Nüst D., Granell, C., Hofer, B., Konkol, M. (2020): <15% articles had available materials for Methods and Results (75 articles from GIScience conference, 2012-2018)

Discussion questions: Do you know about similar studies? What do they say?

What Gets into Papers?

More than 20% of chemistry researchers have deliberately added information they believe to be incorrect into their manuscripts during the peer review process, in order to get their papers published.

— 1 in 5 chemists have deliberately added errors into their papers during peer review, study finds. Chemical & Engineering News (C&EN). October 20, 2025. Access Date: 2025-10-24

Openness

What Open Means

- The Open Definition

- Knowledge is open if anyone is free to access, use, modify, and share it — subject, at most, to measures that preserve provenance and openness. [as officially summed up]

- More than one term in use: open, free, libre, FOSS, FLOSS

- Free Cultural Works

- The Open Source Definition

- The Open Source AI Definition

- The Free Software Definition

- The Debian Free Software Guidelines and the Debian Social Contract

Image: “Free beer bottles” by free beer pool (CC BY 2.0)

Discussion questions: What is the difference between “free as in free beer” and “free as in freedom”? Have you seen “open” being used for something not fulfilling the Open Definition?

Four Freedoms in the OSI Open Source AI Definition

An Open Source AI is an AI system made available under terms and in a way that grant the freedoms to:

- Use the system for any purpose and without having to ask for permission.

- Study how the system works and inspect its components.

- Modify the system for any purpose, including to change its output.

- Share the system for others to use with or without modifications, for any purpose.

Four Freedoms in the GNU Philosophy

A program is free software if the program's users have the four essential freedoms:

- The freedom to run the program as you wish, for any purpose (freedom 0).

- The freedom to study how the program works, and change it so it does your computing as you wish (freedom 1). Access to the source code is a precondition for this.

- The freedom to redistribute copies so you can help others (freedom 2).

- The freedom to distribute copies of your modified versions to others (freedom 3). By doing this you can give the whole community a chance to benefit from your changes. Access to the source code is a precondition for this.

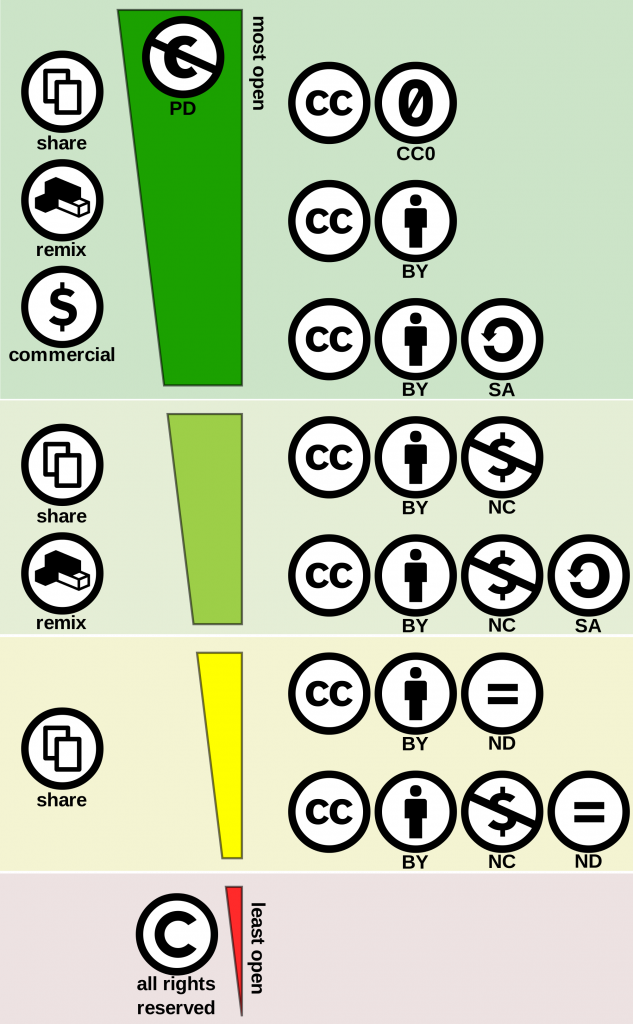

Licensing

- Creative Commons (CC) licenses

- GNU licenses, BSD licenses, ...

- e.g., GNU GPL 3.0

- Choosing a license and lists of licenses:

- choosealicense.com (for software)

- creativecommons.org/choose (CC licenses; for anything except software)

- License Selector (software and CC licenses)

- OpenAIRE (data license Q&A)

- Open Data Commons

- Open Source Initiative

- GNU (Free Software Foundation)

“Creative Commons License Spectrum” by Shaddim (CC BY 4.0), Creative Commons: Understanding Free Cultural Works

Discussion questions: Do you read “terms and conditions”? Have you ever read any “terms and conditions” or end user license agreement (EULA)? What about an open source software license?

Open Science Components

- 6 pillars [Watson 2015]:

- open methodology

- open access

- open data

- open source (software)

- open peer review

- open education (or educational resources)

- other components:

- open hardware

- open formats

- open standards

- open source AI

- related concepts:

- Open-notebook science

- Provenance

- FAIR, CARE, …

- Science 2.0 (like Web 2.0)

- Team science

- Citizen science

- Public science

- Participatory research

- Open innovation

- Open organization

- Crowdsourcing

- Preprints

- Inner source

Discussion questions: What would you add to the list? What do you see here for the first time? What is openwashing?

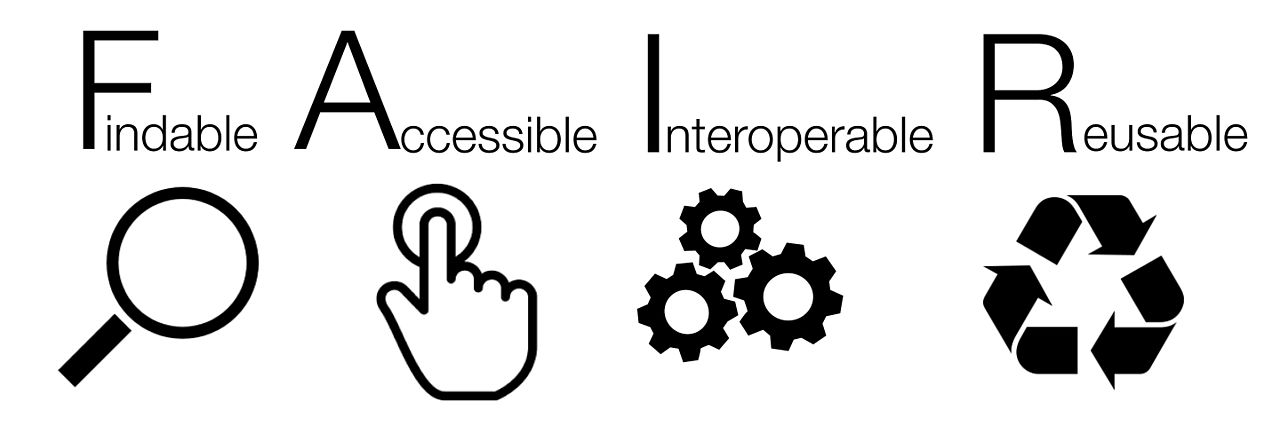

FAIR Principles

- Findable: persistent identifier and metadata for data

- Accessible: sharing protocol is open, free, and universally implementable

- Interoperable: formal language and references to other datasets

- Reusable: clear usage license (can be restricted), detailed provenance

The principles emphasize machine-actionability (…) because humans increasingly rely on computational support to deal with data (…)

[Wilkinson 2016] https://www.go-fair.org/fair-principles
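To make machine-actionability a little more concrete, here is a minimal sketch of a program checking a metadata record against the four principles. The field names (`identifier`, `access_url`, `related_datasets`, and so on), the `FAIR_FIELDS` mapping, and the `check_fair` helper are all hypothetical and chosen only for illustration; they do not come from the FAIR principles themselves or from any metadata standard.

```python
# Hypothetical mapping from each FAIR principle to metadata fields
# (illustrative only; not defined by the FAIR principles).
FAIR_FIELDS = {
    "Findable": ["identifier", "title"],
    "Accessible": ["access_url"],
    "Interoperable": ["format", "related_datasets"],
    "Reusable": ["license", "provenance"],
}


def check_fair(record):
    """Return a dict mapping each principle to its missing or empty fields."""
    missing = {}
    for principle, fields in FAIR_FIELDS.items():
        absent = [name for name in fields if not record.get(name)]
        if absent:
            missing[principle] = absent
    return missing


# Example record; all values are placeholders, not a real dataset.
record = {
    "identifier": "doi:10.0000/example",
    "title": "Example lidar point cloud",
    "access_url": "https://example.org/data.laz",
    "format": "LAZ",
    "license": "CC-BY-4.0",
}

report = check_fair(record)
for principle, fields in report.items():
    print(f"{principle}: missing {', '.join(fields)}")
```

A check like this only confirms that fields are present. Whether an identifier is actually persistent or a license is actually clear still needs human judgment.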

Reactions: FAIREr (Explorability), FAIRER (Equitable and Realistic or Revisible), CARE (Collective Benefit, Authority to Control, Responsibility, Ethics), Good Data

Image: “FAIR guiding principles for data resources” by SangyaPundir (CC BY-SA 4.0)

Discussion questions: Which parts are unique to FAIR and not present in open science? Is source code part of data, data provenance, or is it a separate thing?

The “re” Words

There is no agreement on some of the definitions, especially across fields; definitions often overlap or are swapped, and some sources don't make any distinction.

- replicability: independent validation of specific findings

- repeatability: same conditions, people, instruments, ... (test–retest reliability)

- reproducibility: same results using the same raw data or same materials, procedures, ...

- recomputability: same results received by computation (in computational research)

- reusability: use again the same data, tools, or methods
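Under one narrow reading of recomputability, rerunning the computation should produce output files identical to the published ones. The sketch below compares SHA-256 digests of two files using only the Python standard library. The helper names (`file_digest`, `is_recomputed`) and the CSV contents are assumptions made up for this example.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    sha = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            sha.update(chunk)
    return sha.hexdigest()


def is_recomputed(published, recomputed):
    """True when both files are byte-for-byte identical."""
    return file_digest(published) == file_digest(recomputed)


# Demonstration with temporary stand-in files (made-up values).
with tempfile.TemporaryDirectory() as tmp:
    published = Path(tmp) / "published.csv"
    rerun = Path(tmp) / "rerun.csv"
    published.write_text("cell,index\n1,0.25\n")
    rerun.write_text("cell,index\n1,0.25\n")
    same = is_recomputed(published, rerun)
    # Simulate a rerun that yields a slightly different value.
    rerun.write_text("cell,index\n1,0.26\n")
    different = is_recomputed(published, rerun)

print(same, different)
```

Byte-level identity is a strict criterion. Floating-point results or files with embedded timestamps may call for a tolerance-based comparison instead.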

Discussion questions: As a PhD student, which of these features would you like to see in other research? As a journal paper reviewer, what should you be able to do when you receive a scientific publication for review?

Computational and Geospatial Research

- code is a part of method description [Ince et al. 2012, Morin et al. 2012, Nature Methods 2007]

- use of open source tools is a part of reproducibility [Lees 2012, Alsberg & Hagen 2006]

- easily reproducible result is a result obtained in 10 minutes [Schwab et al. 2000]

- geospatial research specifics:

- some research introduces new code

- some research requires significant dependencies

- some research produces user-ready software

Discussion questions: Is spatial special? Is recomputing the results useful for research? How long should it take to recompute results? Do dependencies need to be open source as well?

Open Science Publication: Use Case

Petras et al. 2017

Petras, V., Newcomb, D. J., & Mitasova, H. (2017). Generalized 3D fragmentation index derived from lidar point clouds. In: Open Geospatial Data, Software and Standards 2(1), 9. DOI 10.1186/s40965-017-0021-8

Open Science Publication: Components

| Publication Component | in the Petras et al. 2017 use case |

|---|---|

| Text | background, methods, results, discussion, conclusions, … (OA) |

| Data | input data (formats readable by open source software) |

| Reusable Code | methods as GRASS tools (C & Python) |

| Publication-specific Code | scripts to generate results (Bash & Python) |

| Computational Environment | details about all dependencies and the code (Docker, Dockerfile*) |

| Versions | repository with current and previous versions* (Git, GitHub) |

* Version associated with the publication included also as a supplemental file.
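The use case records its computational environment with Docker and a Dockerfile. As a lighter and less complete alternative (not the approach used in the paper), a script can at least write down the interpreter, platform, and package versions it ran with. The sketch below uses only the Python standard library; the `describe_environment` helper and the package names passed to it are assumptions for illustration.

```python
import json
import platform
from importlib import metadata


def describe_environment(packages):
    """Collect interpreter, OS, and package versions as a plain dict."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "system": platform.system(),
        "machine": platform.machine(),
        "packages": versions,
    }


# Package names here are examples; list whatever the analysis imports.
env = describe_environment(["numpy", "pip"])
print(json.dumps(env, indent=2, sort_keys=True))
```

Saving this JSON next to the results documents the environment but, unlike a container image, does not recreate it.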

Petras, V. (2018). Geospatial analytics for point clouds in an open science framework. Doctoral dissertation. URI http://www.lib.ncsu.edu/resolver/1840.20/35242

Discussion questions: What other technologies are a good fit for these components? Are there other components or categories? What parts of research did you publish or try to publish, and what challenges did you face?

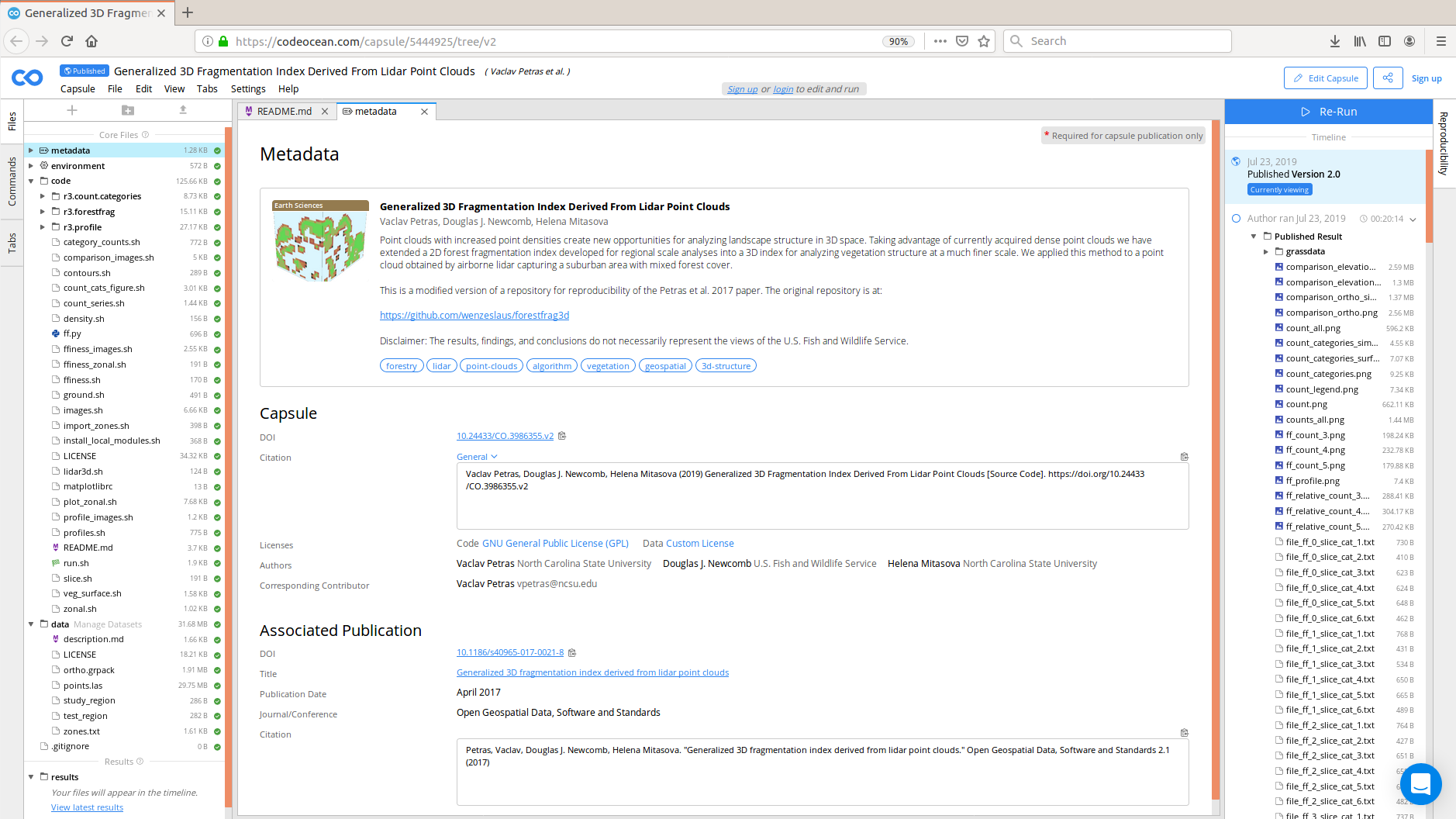

Open Science Publication: In a Single Package Online

- Components other than Text and Versions for Petras et al. 2017 are now also available at Code Ocean as a capsule.

DOI 10.24433/CO.3986355.v2

Discussion questions: What is the skill set needed to publish results like this? What is the long-term sustainability of online recomputability tools such as Code Ocean?

Open Science Publication: Software Platform

- Preprocessing, visualization, and interfaces (GUI, CLI, API)

- Data inputs and outputs, memory management

- Integration with existing analytical tools

- Preservation of the reusable code component (long-term maintenance)

- Dependency which would be hard to change for something else

- Example: FUTURES model implemented as a set of GRASS tools (r.futures.pga, r.futures.demand, r.futures.parallelpga, ...)

Discussion questions: What software can play this role? What are the different levels of integration with a piece of software and their advantages and disadvantages?

Challenges

Focus on Novelty in Publishing and Funding

It would be preferable to have the time to do it right, while simultaneously allowing scientists to be human and make mistakes, instead of focusing on novelty, being first and publishing in highly selective journals.

Discussion questions: What research gets published? What research gets funded?

Holding, A. N. (2019). Novelty in science should not come at the cost of reproducibility. The FEBS Journal, 286(20), 3975–3979. DOI 10.1111/febs.14965

Lack of Incentives for Reproducibility

[...] irreproducible research [...] careers [...] personal cost: young scientists [...] their families [...] visas that are conditional [...] Running out of time [...] pressure on early-career researchers to deliver high-impact results. The outcome is an environment that pushes people to get across the line as quickly as possible, while the incentives to challenge or to reproduce previous studies are minimal.

Discussion questions: How important is it to challenge or reproduce previous studies? How important is being able to reproduce your own studies?

Holding, A. N. (2019). Novelty in science should not come at the cost of reproducibility. The FEBS Journal, 286(20), 3975–3979. DOI 10.1111/febs.14965

Scooping

‘There is always this fear, that someone steals your ideas, or is doing the same thing at the same time, and some people fear it more than other people, I think especially younger people, also some older. I think this causes a lot of stress to the scientists, and it has happened to me. […] you try not to think about it, you still think that what if someone else is doing the same thing and this is useless work, so then it takes your energy.’

Discussion questions: What is scooping (being scooped) in science? Are you afraid of it?

— research participant in

Laine, Heidi (2017). Afraid of scooping: Case study on researcher strategies against fear of scooping in the context of open science. Data Science Journal. DOI 10.5334/dsj-2017-029

PLOS publishes scooped research and negative and null results

Content Creation and GenAI

Well-constructed AI systems generally do not regenerate, in any nontrivial portion, unaltered data from any particular work in their training corpus.

Discussion questions: What have you seen an LLM or other GenAI generate? Was there a copyright or IP issue?

— OpenAI, LP. Before the United States Patent and Trademark Office, Department of Commerce. Comment Regarding Request for Comments on Intellectual Property Protection for Artificial Intelligence Innovation. Docket No. PTO–C–2019–0038

Copyright, Licensing, and GenAI

…GitHub Copilot trains on non permissive licenses… It should be worrisome how easily GitHub Copilot spits out GPL code without being prompted adversarially, independent of what they and other researchers claim.

Discussion questions: Are LLMs/GenAIs different from text and data mining tools? Is copyright obsolete or should it be more enforced?

— GitHub Copilot Emits GPL. Codeium Does Not. Windsurf Team. Apr 20, 2023. Accessed 2025-10-24.

GenAI and Open Source Infrastructure

Once AI training sets subsume the collective work of decades of open collaboration, the global commons idea, substantiated into repos and code all over the world, risks becoming a nonrenewable resource, mined and never replenished. … What makes this moment especially tragic is that the very infrastructure enabling generative AI was born from the commons it now consumes. Free and open source software built the Internet… Every cloud provider, every hyperscale data center, every LLM pipeline sits on a foundation of FOSS.

— Sean O'Brien, founder of the Yale Privacy Lab at Yale Law School in Why open source may not survive the rise of generative AI by David Gewirtz. ZDNET. Oct. 24, 2025. Accessed 2025-10-27.

Private Data

[...] releasing datasets as open data may threaten privacy, for instance if they contain personal or re-identifiable data. Potential privacy problems include chilling effects on people communicating with the public sector, a lack of individual control over personal information, and discriminatory practices enabled by the released data.

Discussion questions: Do you use or create personal or private data in your research or do you expect you will?

Borgesius, F. Z., Gray, J., & van Eechoud, M. (2016). Open Data, Privacy, and Fair Information Principles: Towards a Balancing Framework. DOI 10.15779/Z389S18

Sensitive Data

Insights obtained by compiling public information from Open Data sources, may represent a risk to Critical Infrastructure Protection efforts. This knowledge can be obtained at any time and can be used to develop strategic plans of sabotage or even terrorism.

Discussion questions: Do you use or create sensitive data in your research or do you expect you will?

Fontana, R. (2014). Open Data analysis to retrieve sensitive information regarding national-centric critical infrastructures. http://open.nlnetlabs.nl/downloads/publications/...

Publishing Source Code with a Paper

[...] that’s going to be harder. [...] I’m expecting to get screenshots of MATLAB procedures and horrible Python code that even the author can’t read anymore, and I don’t know what we’re going to do about that. Because in some sense, you can’t push too hard because if they go back and rewrite the code or clean it up, then they might actually change it.

Discussion questions: Have you ever broadly shared source code or other internal parts of your work?

— An interviewed journal editor-in-chief in

Sholler, D., Ram, K., Boettiger, C., & Katz, D. S. (2019). Enforcing public data archiving policies in academic publishing: A study of ecology journals. Big Data & Society, 6(1). DOI 10.1177/2053951719836258

Open Source Software and Research Funding

“That’s really the tragedy of the funding agencies in general,” says Carpenter. “They’ll fund 50 different groups to make 50 different algorithms, but they won’t pay for one software engineer.”

Discussion questions: What open source software that is highly relevant to research do you know? Do you have any idea how it is funded?

— Anne Carpenter, a computational biologist at the Broad Institute of Harvard and MIT in Cambridge in

Nowogrodzki, Anna (2019). How to support open-source software and stay sane. Nature, 571(7763), 133–134. DOI 10.1038/d41586-019-02046-0

Open Source Software and Government

Discussion questions: Do you know GRASS?

[around 1990] [...] GIS industry claimed that it was unfair for the Federal Government to be competing with them.

Westervelt, J. (2004). GRASS Roots. Proceedings of the FOSS/GRASS Users Conference. Bangkok, Thailand.

In 1996 USA/CERL, [...] announced that it was formally withdrawing support [...and...] announced agreements with several commercial GISs, and agreed to provide encouragement to commercialization of GRASS. [...] result is a migration of several former GRASS users to COTS [...] The first two agreements encouraged the incorporation of GRASS concepts into ESRI's and Intergraph's commercial GISs.

Hastings, D. A. (1997). The Geographic Information Systems: GRASS HOWTO. tldp.org/HOWTO/GIS-GRASS

Original announcement: grass.osgeo.org/news/cerl1996/grass.html

Motivation

Theoretical Publishing Goals

- registration: so that scientists get credit

- archiving: so that we preserve knowledge for the future

- dissemination: so that people can use this knowledge

- peer review: so that we know it's worth it

Discussion questions: How are these publishing goals fulfilled by journal papers?

Internal Reasons for Open Science

- Open science in your lab (team-oriented reasons):

- collaboration: work together with your colleagues

- transfer: transfer research between you and your colleagues

- longevity: re-usability of parts of research over time

- Open science by yourself (“selfish” reasons):

- revisit: revisit or return to a project after some time

- correction: correct a mistake in the research

- extension: improve or build on an existing project

Discussion questions: What is your experience with getting back to your own research or continuing research started by someone else? (See PhD Comics: Scratch.) How does open science relate to team science? How can making things public help us achieve the desired effect, and what challenges does that bring?

Resolution for Class Debate

A scientific publication needs to consist of text, data, source code, computational software environment, and reviews which are all openly licensed, in open formats, checked during the submission process, and publicly available without any delay at the time of publication.

Consider: Funding, workload, scooping, AI/GenAI/LLMs, taxpayers, industry, missions of funding agencies, …