height = 600;

width = 800;

THREE = {

const THREE = window.THREE = await require("three@0.130.0/build/three.min.js");

await require("three@0.130.0/examples/js/controls/OrbitControls.js").catch(() => {});

await require("three@0.130.0/examples/js/loaders/OBJLoader.js").catch(() => {});

await require("three@0.130.0/examples/js/loaders/GLTFLoader.js").catch(() => {});

return THREE;

}

// Initialize scene

scene = {

const scene = new THREE.Scene();

return scene;

}

// Initialize renderer

renderer = {

const renderer = new THREE.WebGLRenderer({ antialias: true });

renderer.setSize(width, height);

return renderer;

}

controls = {

const controls = new THREE.OrbitControls(camera, renderer.domElement);

controls.addEventListener("change", () => renderer.render(scene, camera));

invalidation.then(() => (controls.dispose(), renderer.dispose()));

return controls;

}

lights = {

// Create a directional light with increased intensity

const directionalLight = new THREE.DirectionalLight(0xffffff, 2); // Intensity set to 2 (brighter)

directionalLight.position.set(0, 10, 10).normalize();

scene.add(directionalLight);

// Add ambient light for soft overall illumination

const ambientLight = new THREE.AmbientLight(0xffffff, 1); // Intensity set to 1

scene.add(ambientLight);

return { directionalLight, ambientLight };

}

// Initialize camera

camera = {

const camera = new THREE.PerspectiveCamera(85, width / height, 0.1, 1000);

camera.position.set(0, 20, 20);

// Resize the camera aspect when the window is resized

window.addEventListener('resize', () => {

camera.aspect = width / height;

camera.updateProjectionMatrix();

renderer.setSize(width, height);

});

return camera;

}

// Function to center the camera on the model

function centerViewOnModel(model, camera) {

// Compute the bounding box of the model

const box = new THREE.Box3().setFromObject(model);

// Get the center of the model

const center = box.getCenter(new THREE.Vector3());

// Get the size of the model (distance between min and max corners)

// const size = box.getSize(new THREE.Vector3());

// // Move the camera based on the size of the model

// const maxDim = Math.max(size.x, size.y, size.z);

// const fov = camera.fov * (Math.PI / 180); // Convert vertical FOV to radians

// let cameraZ = Math.abs(maxDim / (2 * Math.tan(fov / 2))); // Distance from the object

// cameraZ *= 0.5; // Optionally add some padding

// Set the camera position

camera.position.set(center.x, center.y + 200, center.z);

// Make the camera look at the center of the model

camera.lookAt(center);

}

importedObj = {

const url = await FileAttachment('data/model.glb').url()

return loadObject(url)

}

loadObject = (url) => new Promise ((resolve,reject) => {

const loader = new THREE.GLTFLoader();

// instantiate a loader

loader.load(

// resource URL

url,

// called when resource is loaded

function ( gltf ) {

const model = gltf.scene;

scene.add(model);

centerViewOnModel(model, camera);

resolve(model);

},

// called while loading is in progress

function ( xhr ) {

return ( xhr.loaded / xhr.total * 100 ) + '% loaded' ;

},

// called when loading has errors

function ( error ) {

reject(error); // pass the loader's error through instead of discarding it

}

);

})

// Render loop

{

while (true) {

requestAnimationFrame(() => {

// Rotate the scene slightly

// scene.rotation.y += 0.001;

// scene.rotation.z -= 0.001;

// Render the scene with the camera

renderer.render(scene, camera);

});

await Promises.delay(16); // Delay of ~16ms for smooth animation (60fps)

}

}

Assignment 2B

Imagery processing and structure from motion (SfM)

Geoprocessing of the UAS data

In this assignment you will generate an orthomosaic and a Digital Surface Model (DSM) from photos taken by the Trimble UX5 Rover UAS (flight mission executed on September 22, 2016). The study area is located at Lake Wheeler COA. You will also review the processing results in the generated report and, optionally, export the 3D model, the point cloud, and the camera calibration and orientation data.

Processing can be very time consuming, depending on the computational power of your device and the desired quality. To minimize processing time, we will process only a fraction of the collected data and use imagery downsampled by 50%. This allows us to generate the outputs in the classroom.

General workflow (with GCPs)

Preparation

Data

OPTION 1 - Small area

You can use a smaller area to accelerate processing. The photos are not downsampled, so the outputs retain the highest resolution.

- download data - photos, log and coordinates of 3 GCPs

OPTION 2 - Full flight, photos original resolution

Warning

LONG PROCESSING TIME!

- download data - photos, log and coordinates of 12 GCPs

OPTION 3: You can use your own data

Software

- Agisoft Metashape Professional (installer)

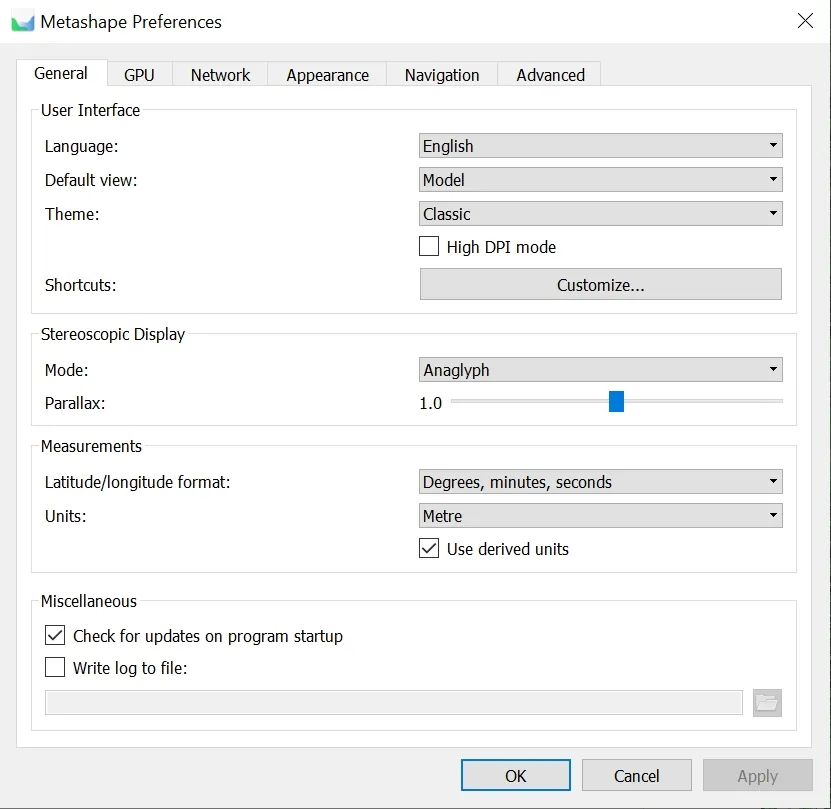

Preferences

When launching Metashape for the first time, some settings need to be adjusted to optimize performance. These settings need to be done only once, at the first use of Metashape, and are loaded by default in subsequent sessions.

Stage 1: Aligning Photos

To locate the GCPs, a simple preliminary model must be built first.

Adding photos

Loading camera positions

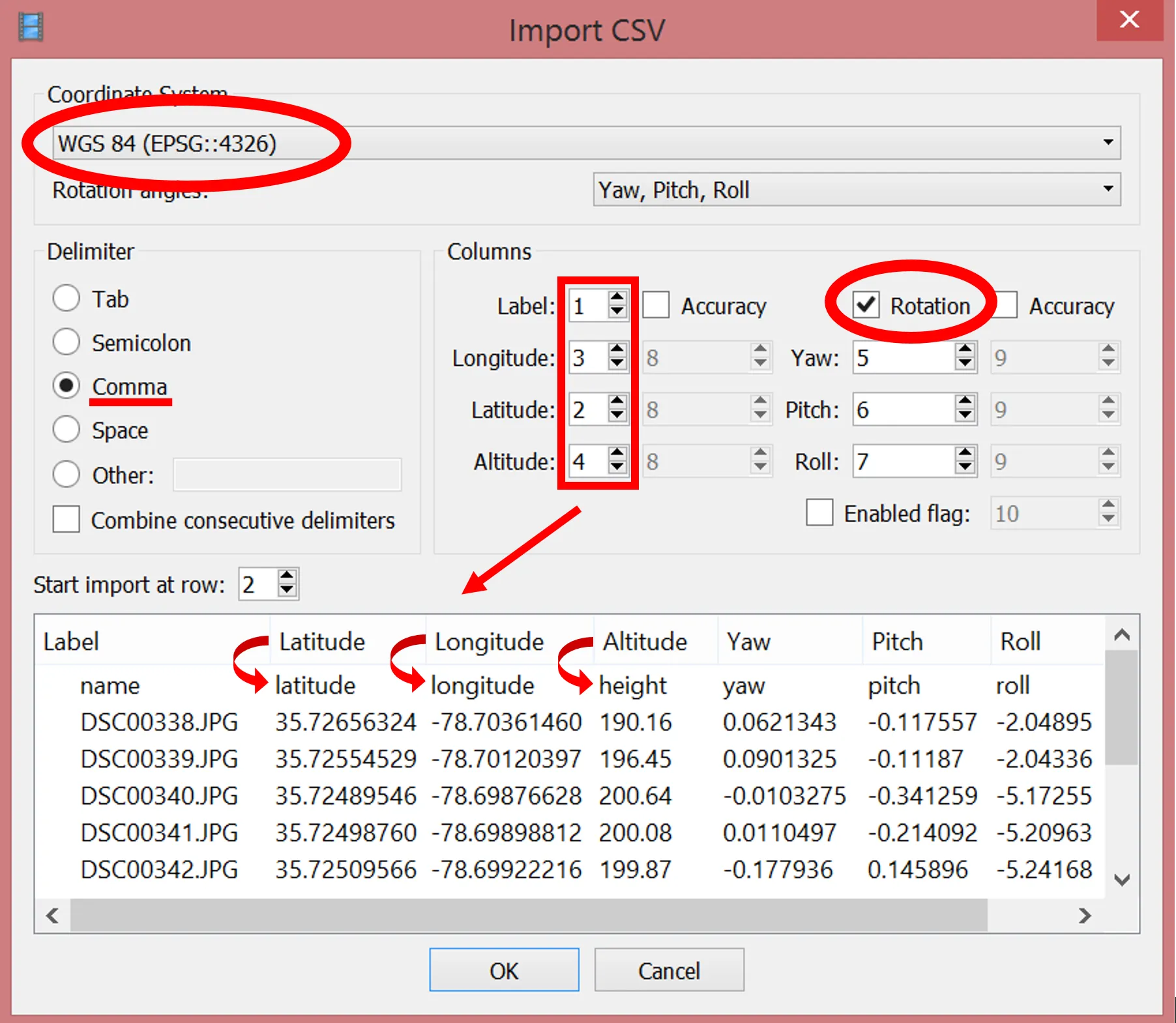

Click the Import button on the Reference pane toolbar > select the file containing camera position information (2016_09_22_sample.txt) in the Open dialog.

Note

Agisoft supports camera orientation files in 5 formats: .csv, .txt, .tel, .xml, and .log. The default format of the Trimble Aerial Imaging log is .jxl. To convert the Trimble .jxl log file, run the script by Vaclav Petras or use the provided log that has already been converted to .txt format.

Make sure that the columns are named properly – you can adjust their placement by indicating column number (top right of dialog box).

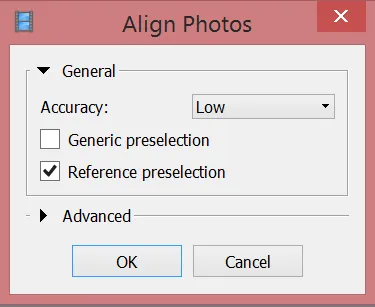

Aligning photos

- Accuracy: Low
- Check: Reference preselection
- Key point limit: default
- Tie point limit: default
- Adaptive camera model fitting: enabled

Stage 2: Placing GCPs

Loading the GCPs coordinates

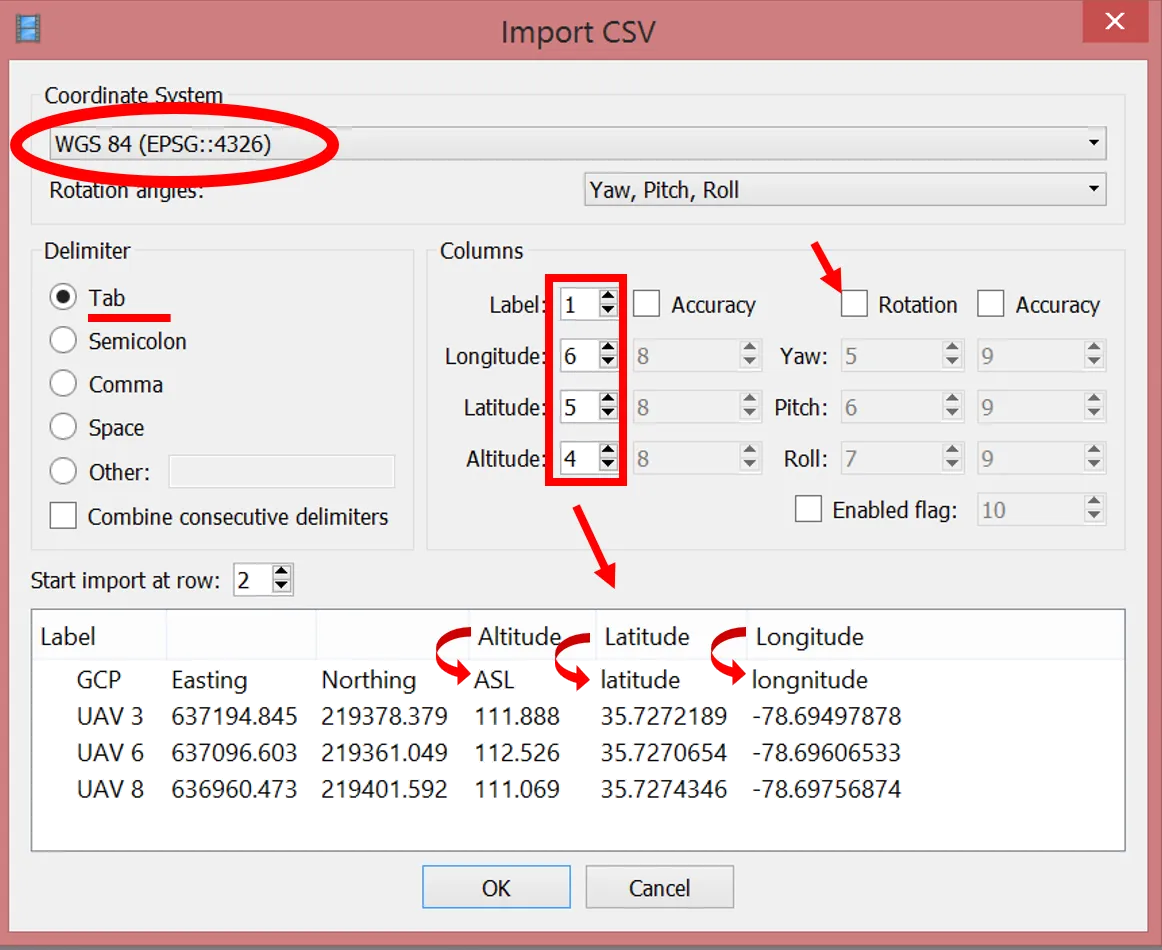

Click the Import button on the Reference pane toolbar > select the file containing the Ground Control Point coordinates (GCP_3.txt) in the Open dialog.

Make sure that the columns are named properly; you can adjust their placement by indicating the column number (top right of the dialog box). This time, uncheck the Rotation box: GCPs are stationary, so yaw, pitch, and roll angles do not need to be determined.

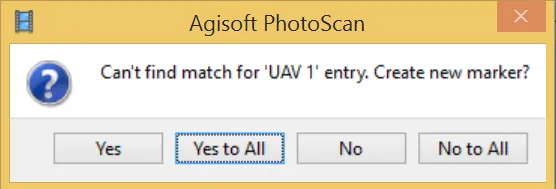

A window will pop up with the message: Can’t find match for ‘UAV 3’ entry. Create new marker?

Choose ‘Yes to All’ to create a new marker for each of the named GCPs in the file. The markers will be listed in the Reference pane under the list of photos.
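The column mapping in the import dialog can be pictured with a small parser. This is only a sketch: the function name `parseReferenceRows`, the comma delimiter, the `#` comment convention, and the sample rows are assumptions made for illustration and do not describe the actual layout of GCP_3.txt.

```javascript
// Parse delimited reference text into marker records.
// `columns` holds 1-based column numbers, mirroring the column
// pickers in the import dialog (hypothetical helper, not a Metashape API).
function parseReferenceRows(text, columns, delimiter = ",") {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim())
    // Skip empty lines and lines treated here as comments.
    .filter((line) => line.length > 0 && !line.startsWith("#"))
    .map((line) => {
      const fields = line.split(delimiter).map((field) => field.trim());
      const numberAt = (column) => Number(fields[column - 1]);
      return {
        label: fields[columns.label - 1],
        x: numberAt(columns.x),
        y: numberAt(columns.y),
        z: numberAt(columns.z),
      };
    });
}

// Made-up sample rows for illustration only; not the contents of GCP_3.txt.
const sampleText = "# label,x,y,z\nUAV 1,10.5,20.25,3\nUAV 3,11,21,4\n";
const markers = parseReferenceRows(sampleText, { label: 1, x: 2, y: 3, z: 4 });
console.log(markers.map((marker) => marker.label));
```

If the columns are assigned to the wrong numbers, the coordinates end up in the wrong fields, which is why the dialog asks you to verify the mapping before importing.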

Indicating GCPs on the pictures

Now you need to find each of the GCPs and indicate its location on all photos depicting it. The process is also shown in the instructional video.

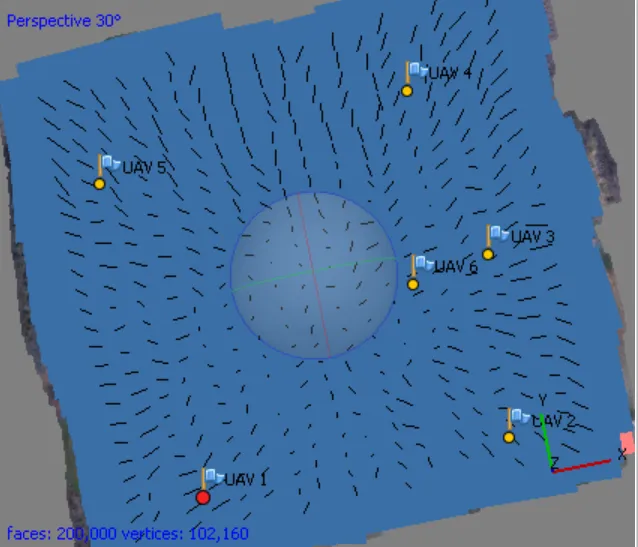

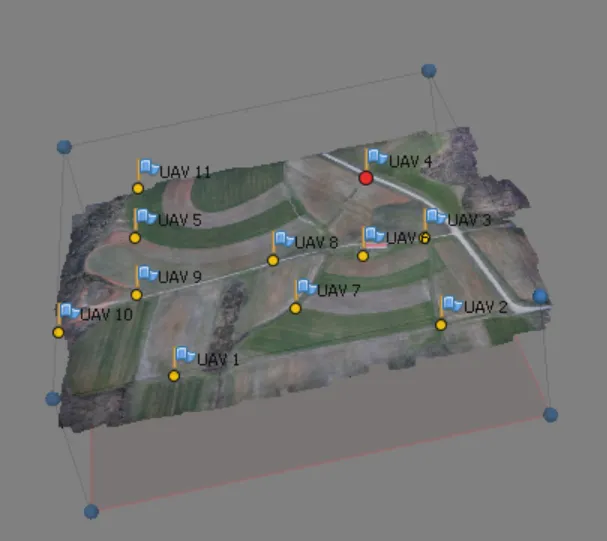

The Model pane shows the approximate positions of the GCPs; they are easier to see if the ‘Show cameras’ option is disabled (Menu > View > Show/Hide Items > Show cameras).

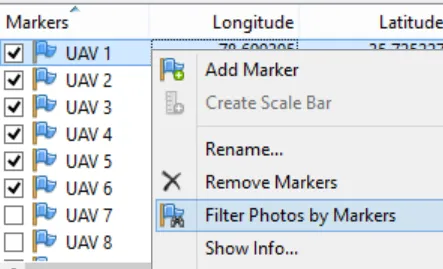

Right-click the selected point in the list and choose Filter Photos by Marker from the context menu.

The Photos pane then shows only the images in which the currently selected GCP is probably* visible.

Note

*This is possible because our pictures are geotagged (the log indicates the position of each photo). If you work with pictures that are not geotagged, or in an area you do not know well enough to “guess” which photo depicts which GCP, it is useful to build the dense point cloud, or even the mesh and texture (see the following steps), so that the Model pane shows a preliminary model of the area rather than just a sparse cloud (as depicted in the figures above).

Open an image by double-clicking its thumbnail. It will open in a tab next to the Model pane. The GCP will appear as a grey icon. This icon needs to be moved to the middle of the GCP visible in the photo.

Drag the marker to the correct measurement position. At that point, the marker will appear as a green flag, meaning it is enabled and will be used for further processing.

Double-click the next photo and repeat the steps. Once the GCP marker position has been indicated on at least two images, the proposed position will almost exactly match the point of measurement. You can then drag the marker slightly to enable it (turning it into a green flag) or leave it unchanged (gray marker icon) to exclude it from processing.

Filter photos by each marker again. Agisoft adjusts the GCP positions on the fly, so you can locate the GCP on additional images that appear in the Photos pane.

In this sample processing we will include 3 GCPs. Mathematically, you need to indicate marker positions for at least 3 GCPs. Accurate error estimates can be calculated with at least 4 GCPs, while often at least 5 are needed to cover the center of the project as well, which reduces the chance of error propagation and resulting terrain distortions especially on flat or undulating terrain types.

Click Save in the toolbar.

Optimizing alignment

You can see the errors by clicking the View Errors icon. Best results are obtained when the alignment is optimized first based on the camera coordinates only, and then a second time based on the GCPs only.

Click the Optimize icon in the Ground Control toolbar (check all the boxes).

Warning

Depending on the version of Metashape, the Optimize icon may appear as a star.

Optimizing based on the GCP Markers

In the Ground Control pane:

- disable all the camera coordinates (select one > press Ctrl+A > right-click > choose Uncheck).
- enable all the GCP Marker coordinates (select one > press Ctrl+A > right-click > choose Check).

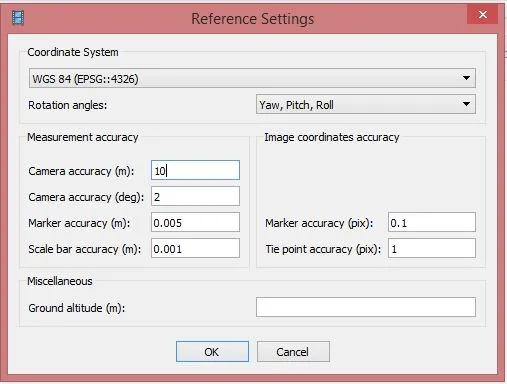

Click the Settings icon in the Ground Control toolbar:

- leave the default values (Marker accuracy (m) = 0.005 m)

Click the Optimize icon in the Ground Control toolbar (leave all options at the default).

Click Save in the toolbar.

You can now see how much the errors were reduced through optimization by clicking the View Errors icon.

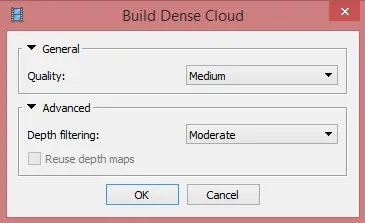

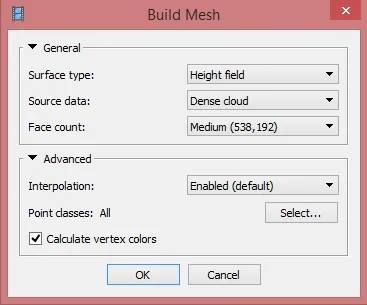
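To make the error comparison concrete, the sketch below computes a per-marker 3D error and a total root-mean-square error (RMSE) from estimated and surveyed coordinates. It assumes the total is the RMSE of the per-marker residuals, which is the conventional definition; the source does not confirm that Metashape uses exactly this formula. The function names and the sample coordinates are hypothetical.

```javascript
// 3D distance between a marker's estimated and reference position (meters).
function markerError(marker) {
  const [ex, ey, ez] = marker.estimated;
  const [rx, ry, rz] = marker.reference;
  return Math.hypot(ex - rx, ey - ry, ez - rz);
}

// Root-mean-square of the per-marker errors.
function totalRmse(markers) {
  if (markers.length === 0) {
    throw new Error("at least one marker is required");
  }
  const sumOfSquares = markers.reduce(
    (sum, marker) => sum + markerError(marker) ** 2,
    0
  );
  return Math.sqrt(sumOfSquares / markers.length);
}

// Made-up coordinates for illustration only.
const exampleMarkers = [
  { label: "A", estimated: [3, 0, 0], reference: [0, 0, 0] },
  { label: "B", estimated: [0, 4, 0], reference: [0, 0, 0] },
];
console.log(exampleMarkers.map(markerError), totalRmse(exampleMarkers));
```

Comparing this value before and after optimization gives a single number for how much the alignment improved, while the per-marker errors show whether one badly placed GCP dominates the total.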

Stage 3: Building complex geometry

Before starting the Build Dense Cloud step it is recommended to check the bounding box of the reconstruction (to make sure that it includes the whole region of interest, in all dimensions). The bounding box should also not be too large (increased processing time and memory requirements).

The bounding box can be adjusted using the Resize Region and Rotate Region tools from the toolbar. Make sure that the base (red plane) is at the bottom.

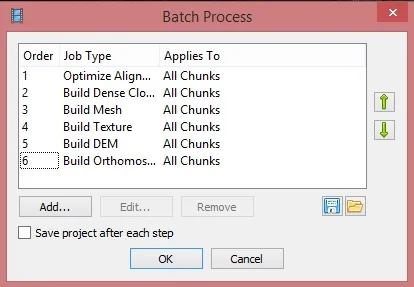
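The two bounding box checks described above (the box must contain the whole region of interest, but should not be much larger than it) can be expressed as a short sketch. It assumes axis-aligned boxes given as `min` and `max` corners, which is a simplification because the region in Metashape can also be rotated. All names and values here are hypothetical.

```javascript
const AXES = ["x", "y", "z"];

// True when `region` fully encloses `roi` on every axis.
function regionContains(region, roi) {
  return AXES.every(
    (axis) => region.min[axis] <= roi.min[axis] && region.max[axis] >= roi.max[axis]
  );
}

// Volume of an axis-aligned box.
function boxVolume(box) {
  return AXES.reduce((volume, axis) => volume * (box.max[axis] - box.min[axis]), 1);
}

// How many times larger the region is than the region of interest.
function oversizeFactor(region, roi) {
  return boxVolume(region) / boxVolume(roi);
}

// Made-up extents for illustration only.
const region = { min: { x: 0, y: 0, z: 0 }, max: { x: 10, y: 10, z: 10 } };
const roi = { min: { x: 1, y: 1, z: 1 }, max: { x: 9, y: 9, z: 5 } };
console.log(regionContains(region, roi), oversizeFactor(region, roi));
```

A large oversize factor suggests the box could be tightened to save processing time and memory, while a failed containment check means part of the area of interest would be cut off.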

The next steps are time consuming (depending on the desired quality and the number of pictures). You can execute them one step at a time, or set up batch processing, which requires no user interaction until the end of geoprocessing (especially useful for Ultra High quality, which can require several days of processing). Batch processing is explained at the end of this section.

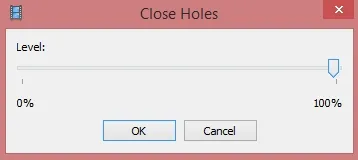

Editing geometry

Sometimes it is necessary to edit the geometry before building the texture atlas and exporting the model.

If the overlap of the original images was not sufficient, the model can contain holes. In this case, to obtain a model without holes, use the Close Holes command. This is crucial if you want to perform any volume calculations: in that case, all holes need to be closed.

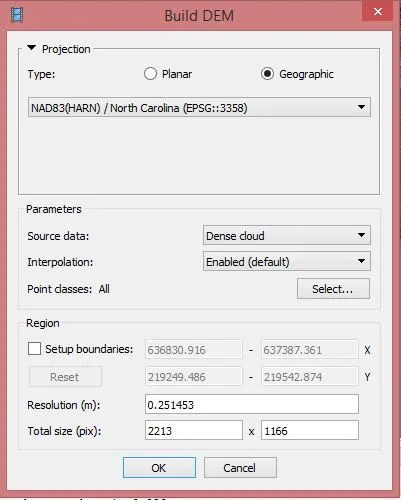

Building DEM

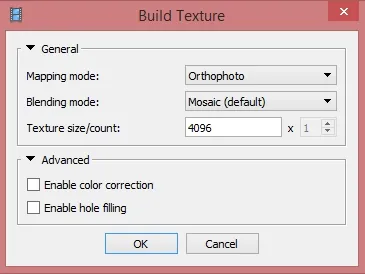

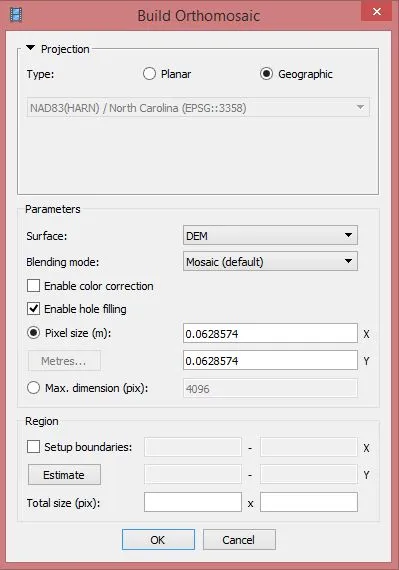

Building Orthophoto

Batch processing

Stage 4: Exporting results

This option will be disabled if you are working in the demo version.

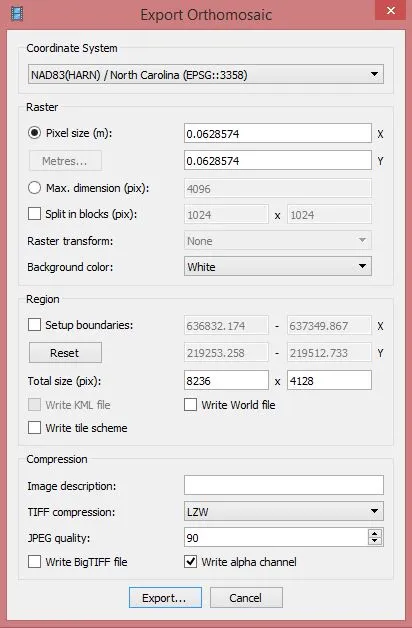

Orthomosaic

File > Export Orthophoto > Export JPEG/TIFF/PNG

- Projection Type: geographic (default)
- Datum: WGS 1984
- Write KML file (footprint) and World file (.tfw): check if desired (if left unchecked, georeferencing information will still be contained in the GeoTIFF .tif file)
- Blending mode: Mosaic (default)
- Pixel size: leave default

Leave default values. Fill out the desired name and save as type TIFF/GeoTIFF (*.tif).

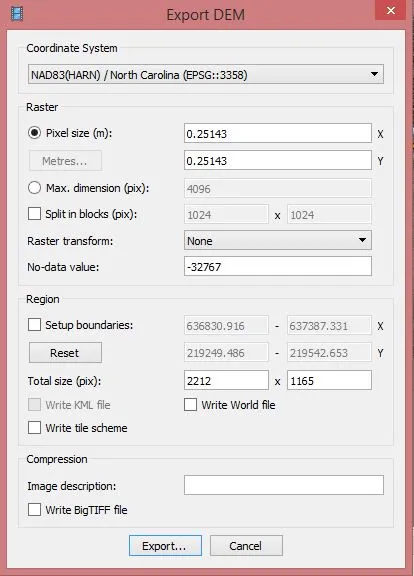
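For readers unfamiliar with the World file (.tfw) option, the sketch below builds the six lines that such a file conventionally contains for a north-up raster: pixel width, two rotation terms, negative pixel height, and the coordinates of the center of the upper-left pixel. The function name and the input values are hypothetical, and the zero rotation terms assume an unrotated image; the source does not show the file that Metashape actually writes.

```javascript
// Build the text of a world file for a north-up raster.
// upperLeftX / upperLeftY are the coordinates of the outer corner of the
// upper-left pixel; the world file stores the center of that pixel.
function worldFileText({ pixelSizeX, pixelSizeY, upperLeftX, upperLeftY }) {
  const lines = [
    pixelSizeX,                  // A: pixel size in the x direction
    0,                           // D: rotation term (0 for north-up)
    0,                           // B: rotation term (0 for north-up)
    -pixelSizeY,                 // E: negative pixel size in the y direction
    upperLeftX + pixelSizeX / 2, // C: x of the upper-left pixel center
    upperLeftY - pixelSizeY / 2, // F: y of the upper-left pixel center
  ];
  return lines.map(String).join("\n");
}

// Made-up values for illustration only.
const tfw = worldFileText({
  pixelSizeX: 0.5,
  pixelSizeY: 0.5,
  upperLeftX: 100,
  upperLeftY: 200,
});
console.log(tfw);
```

With the geographic WGS 1984 projection selected above, the pixel size and coordinates would be expressed in degrees rather than meters.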

Digital surface model

Generating report

Model

Point cloud

Camera calibration and orientation data

Render 3D Model

Here is an example of the 3D model, exported as a glTF file and rendered using Three.js.
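The commented-out sizing logic in `centerViewOnModel` can be sketched as a standalone function that returns how far the camera must be from the model for its largest dimension to fit the vertical field of view. This is an illustration under stated assumptions, not the notebook's actual behavior (which places the camera at a fixed offset of 200 above the center); the name `fitDistance` and the `padding` parameter are hypothetical.

```javascript
// Distance at which an object of the given size fills the vertical field of view.
// size: { x, y, z } extents of the model's bounding box
// fovDegrees: vertical field of view, as used by THREE.PerspectiveCamera
// padding: multiplier applied to the distance (values above 1 move the camera back)
function fitDistance(size, fovDegrees, padding = 1) {
  const maxDim = Math.max(size.x, size.y, size.z);
  const fov = fovDegrees * (Math.PI / 180); // convert to radians
  return (padding * maxDim) / (2 * Math.tan(fov / 2));
}

// Made-up model size for illustration only.
const distance = fitDistance({ x: 10, y: 2, z: 4 }, 90);
console.log(distance);
```

In the notebook, the size would come from `box.getSize(new THREE.Vector3())` and the result could replace the fixed offset passed to `camera.position.set`.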